| Modern research efforts concerned with animal behavior rely heavily on image and video analysis. While such data are now quick to obtain, extracting and analyzing complex behaviors under naturalistic conditions from digital imagery is still a major challenge. In this study, we introduce a novel system for imaging and analyzing the feeding behavior of freely-swimming fish larvae, as filmed in large aquaculture rearing pools, a naturalistic environment. We first describe the design of a specialized system for imaging these tiny, fast-moving creatures. We then show that an analysis pipeline based on action classification can be used to successfully detect and classify the sparsely-occurring behavior of the fish larvae in a curated experimental setting from videos featuring multiple animals. Additionally, we introduce three new annotated datasets of underwater videography, in a curated and an uncurated setting. Finally, we share the methods used and insights gained during the training and evaluation of models on low sample sizes and highly imbalanced datasets. As these challenges are common to the analysis of animal behavior under naturalistic conditions, we believe our findings can contribute to the growing field of computer vision for the study and understanding of animal behavior. |

|

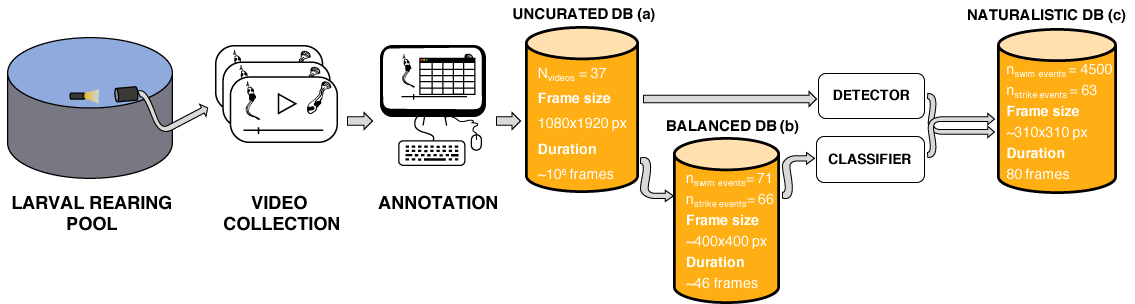

| An overview of our workflow from data acquisition, through data annotation & curation to data analysis. |

We are interested in detecting larval fish feeding behavior in the wild.

This behavior is crucial to their survival and has never before been directly imaged outside the laboratory.

In order to do this we tackle 3 challenges:

|

|

| Two examples from the Balanced dataset, a strike (left) and a swim (right). |

Our goal is to detect feeding strikes of larval fish (left) in untrimmed videos.

To do this, we take an action classification approach that distinguishes between swimming (right) and striking (left) behavior.

We present a total of 3 datasets, one uncurated and two curated:

|

|

| Two examples from the Naturalistic dataset, a strike (left) and a swim (right). |

|

|

|

|

|

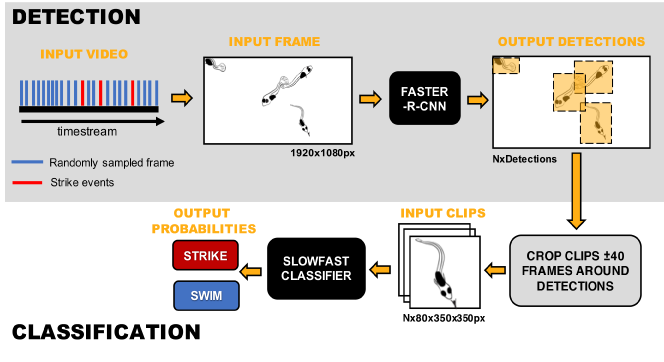

| Visualization of our analysis pipeline and the curated experiment. |

|

To detect feeding strikes of larval fish, we take a two-stage approach: detection followed by action classification. We trained a Faster R-CNN using Detectron2 to detect our fish. We also trained a SlowFast action classifier using PyTorchVideo to classify each fish's behavior into one of two classes, 'swim' or 'strike'.
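The two-stage flow can be sketched in plain Python. This is only an illustration under stated assumptions: `classify_frame`, `detect`, `classify`, and `half_window` are hypothetical names, frames are represented as nested lists, and the idea that the classifier receives a crop around each detection over a short temporal window is an assumption rather than a documented detail of our pipeline. In practice `detect` would wrap the trained Faster R-CNN and `classify` the trained SlowFast model.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels


def classify_frame(
    frames: Sequence,            # video as a sequence of 2D frames (rows of pixels)
    index: int,                  # frame on which the detector is run
    detect: Callable,            # hypothetical: frame -> list of Box
    classify: Callable,          # hypothetical: clip -> "swim" or "strike"
    half_window: int = 8,        # assumed temporal context on each side
) -> List[Tuple[Box, str]]:
    """Detect fish in frames[index], then label a short clip around each detection."""
    start = max(0, index - half_window)
    stop = min(len(frames), index + half_window + 1)
    results = []
    for box in detect(frames[index]):
        x0, y0, x1, y1 = box
        # Crop the same spatial window from every frame in the temporal window.
        clip = [[row[x0:x1] for row in frame[y0:y1]] for frame in frames[start:stop]]
        results.append((box, classify(clip)))
    return results


# Usage with stand-in models on 20 blank 4x6 frames.
frames = [[[0] * 6 for _ in range(4)] for _ in range(20)]
fake_detect = lambda frame: [(1, 1, 4, 3)]
fake_classify = lambda clip: "swim"
out = classify_frame(frames, 10, fake_detect, fake_classify)
```

Passing the two models in as callables keeps the sketch independent of any specific library API.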

We test this pipeline in a curated experiment: we sample the frames where we know a strike occurs, plus additional frames where we know no strike occurs. To simulate a more natural ratio of events, we sample roughly 10 times more frames without strikes. For each sampled frame we apply the detector, followed by the action classifier. Doing this for the 11 videos from the uncurated dataset with the highest strike rates produced the Naturalistic dataset. The code for training the SlowFast model (including some custom data augmentations) and for this experiment will be available in our repository soon. |
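The frame sampling described above might look roughly like the following. This is a minimal sketch, not our released code: `sample_frames`, `neg_ratio`, and `margin` are hypothetical names, and excluding frames within a fixed margin of any annotated strike is an assumed way of guaranteeing that the negative frames contain no strike.

```python
import random


def sample_frames(strike_frames, n_frames, neg_ratio=10, margin=30, seed=0):
    """Return (positives, negatives): every annotated strike frame, plus about
    neg_ratio times as many frames with no strike within `margin` frames."""
    rng = random.Random(seed)
    strikes = sorted(set(strike_frames))
    # Frames too close to any strike are excluded from the negative pool.
    excluded = set()
    for s in strikes:
        excluded.update(range(max(0, s - margin), min(n_frames, s + margin + 1)))
    pool = [f for f in range(n_frames) if f not in excluded]
    # Never ask for more negatives than the pool can supply.
    k = min(len(pool), neg_ratio * len(strikes))
    negatives = sorted(rng.sample(pool, k))
    return strikes, negatives


# Three annotated strikes in a 20,000-frame video.
pos, neg = sample_frames([1200, 5400, 9100], n_frames=20000)
print(len(pos), len(neg))  # 3 30
```

Fixing the seed makes the sampled negative set reproducible across runs.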

|

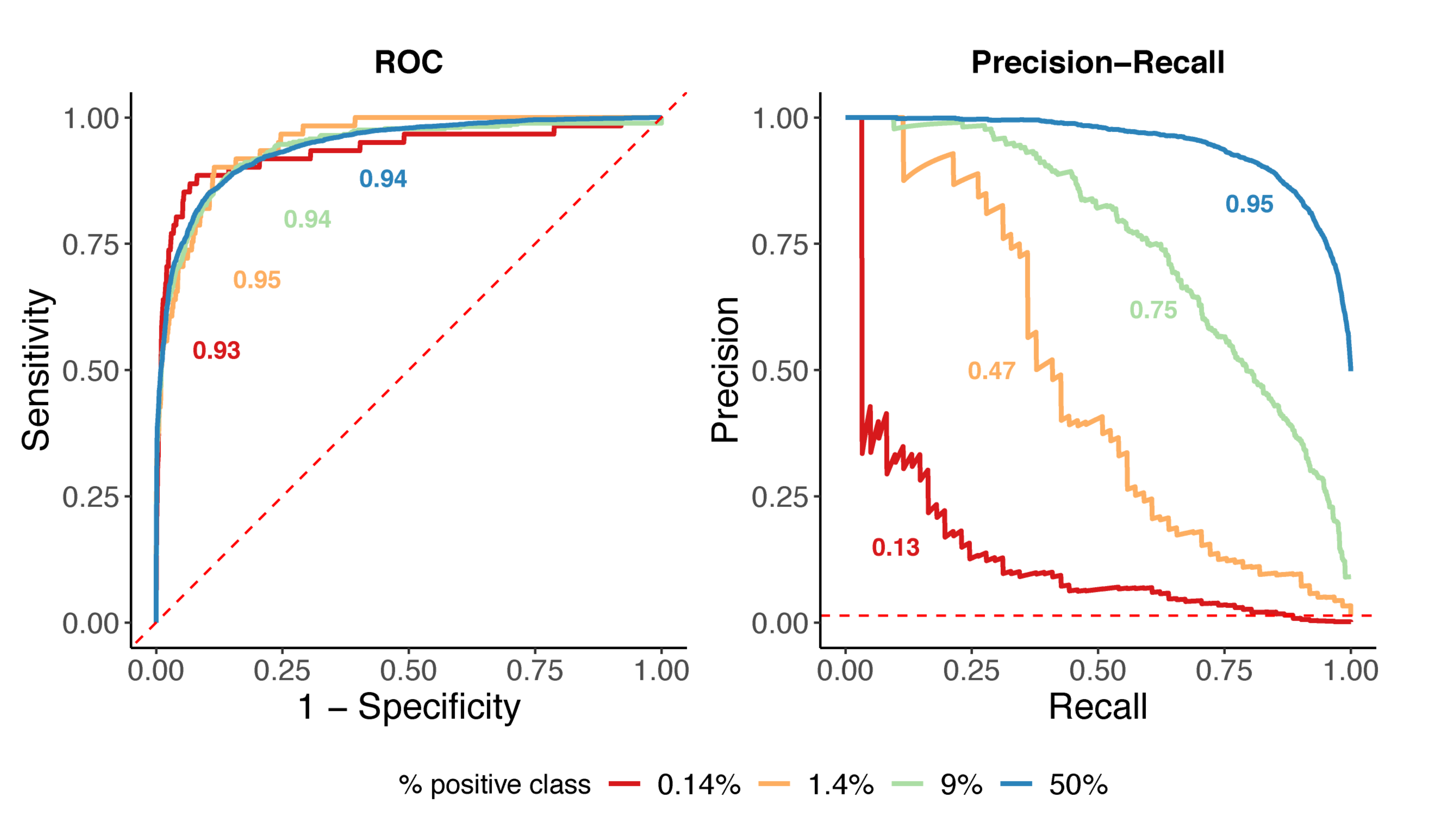

| Results from our simulation study, showing that the performance of the same classifier varies greatly under different levels of data imbalance. |

|

Because our events of interest are extremely rare, we face both a very small sample size and a high data imbalance. ROC curves are known to be overly optimistic for imbalanced data. Precision-recall curves (PRCs) are a popular alternative, but it is less well known that they are sensitive to the level of imbalance in the data: even the same classifier can produce dramatically different results. This is crucial when taking a trained classifier out to the wild, where feeding strikes are much more sporadic than in our curated datasets.
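One way to see this sensitivity is to write precision in terms of the classifier's true-positive rate (TPR), its false-positive rate (FPR), and the fraction of positive samples $\pi$. This is a standard identity, added here for illustration; the symbol $\pi$ and the numbers below are illustrative and are not results from our experiments.

$$
\text{precision} = \frac{\pi \cdot \mathrm{TPR}}{\pi \cdot \mathrm{TPR} + (1 - \pi) \cdot \mathrm{FPR}}
$$

TPR and FPR do not depend on $\pi$, which is why the ROC curve stays put as the imbalance changes, while precision does not. For example, a classifier operating at TPR = 0.8 and FPR = 0.05 has a precision of about 0.94 on balanced data ($\pi = 0.5$), but only about 0.14 when positives make up 1% of the data ($\pi = 0.01$).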

We developed a simulation scheme that lets us assess what performance to expect under small sample sizes, at different levels of data imbalance and with classifiers of different quality. The simulation is written in R and uses the precrec package for ROC and PRC calculations; you can find it in our repository. |
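The idea behind such a simulation can be illustrated with a small, self-contained Python sketch. It is a stand-in, not the R/precrec code from our repository: the Gaussian score model, the function names, and the parameters `separation` and `neg_per_pos` are assumptions made for illustration. The same "classifier" (a fixed separation between positive and negative scores) is evaluated at several imbalance levels with a small, fixed number of positives.

```python
import random


def roc_auc(pos, neg):
    """Probability that a random positive outscores a random negative."""
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(pos, neg):
    """Mean of the precision values at the rank of each positive sample."""
    ranked = sorted([(s, 1) for s in pos] + [(s, 0) for s in neg], reverse=True)
    hits, total = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            total += hits / rank
    return total / len(pos)


def simulate(n_pos, neg_per_pos, separation, rng):
    """Draw classifier scores: positives ~ N(separation, 1), negatives ~ N(0, 1)."""
    pos = [rng.gauss(separation, 1.0) for _ in range(n_pos)]
    neg = [rng.gauss(0.0, 1.0) for _ in range(n_pos * neg_per_pos)]
    return roc_auc(pos, neg), average_precision(pos, neg)


rng = random.Random(0)
results = {}
for ratio in (1, 10, 100):  # negatives per positive
    runs = [simulate(30, ratio, 2.0, rng) for _ in range(20)]
    mean_auc = sum(r[0] for r in runs) / len(runs)
    mean_ap = sum(r[1] for r in runs) / len(runs)
    results[ratio] = (mean_auc, mean_ap)
    print(f"1:{ratio}  ROC AUC = {mean_auc:.2f}  average precision = {mean_ap:.2f}")
```

In this sketch the ROC AUC stays roughly constant across imbalance levels, while the average precision drops as negatives become more common, even though the score distributions never change.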

|

Pre-print on bioRxiv. |

Questions? |

Acknowledgements |